nkapila6/mcp-local-rag (updated 21 days ago)

Tags: Remote, web search, local server, RAG. License: None. Language: Python.

mcp-local-rag

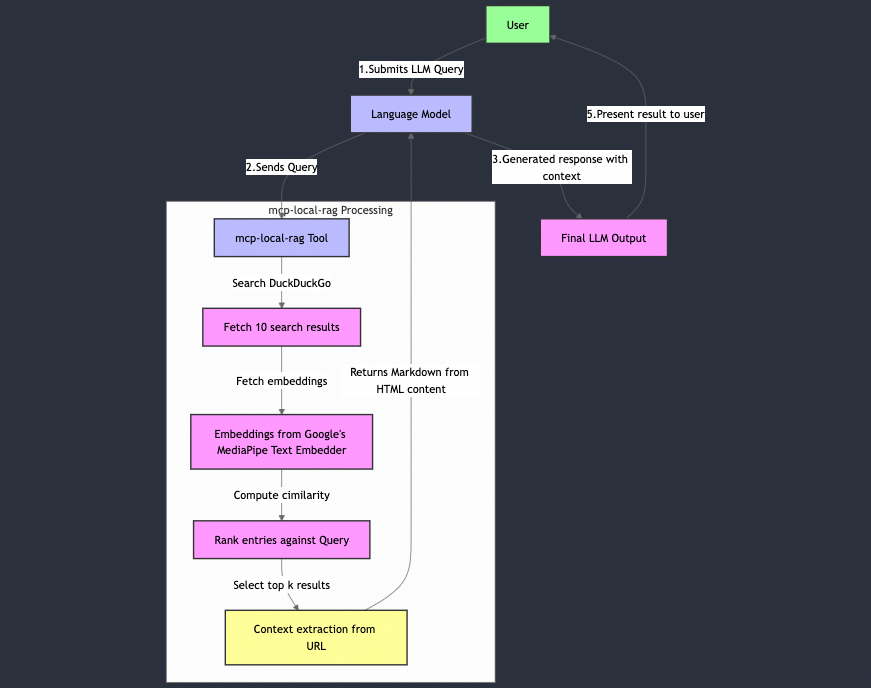

A "primitive" RAG-like web search Model Context Protocol (MCP) server that runs locally. ✨ No APIs ✨

Installation instructions

- You will need to install uv: https://docs.astral.sh/uv/

If you do not want to clone the repository in Step 2, just paste this directly into your Claude config. You can find the configuration paths here: https://modelcontextprotocol.io/quickstart/user

    {
      "mcpServers": {
        "mcp-local-rag": {
          "command": "uvx",
          "args": [
            "--python=3.10",
            "--from",
            "git+https://github.com/nkapila6/mcp-local-rag",
            "mcp-local-rag"
          ]
        }
      }
    }
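If your Claude config already lists other MCP servers, pasting the snippet over the file would drop them. The sketch below shows one way to merge the entry into an existing config instead. The helper names `add_server` and `update_config_file` are hypothetical and not part of this project, and the config path is something you pass in yourself (see the quickstart link above for where it lives on your system).

```python
import json
from pathlib import Path

# Server entry copied from the config shown above.
MCP_LOCAL_RAG_ENTRY = {
    "command": "uvx",
    "args": [
        "--python=3.10",
        "--from",
        "git+https://github.com/nkapila6/mcp-local-rag",
        "mcp-local-rag",
    ],
}


def add_server(config: dict, name: str, entry: dict) -> dict:
    """Return a copy of `config` with `entry` registered under mcpServers[name].

    Hypothetical helper: other servers and top-level keys are preserved.
    """
    merged = dict(config)
    servers = dict(merged.get("mcpServers", {}))
    servers[name] = entry
    merged["mcpServers"] = servers
    return merged


def update_config_file(path: Path) -> None:
    """Read the JSON config at `path` (if present), add the entry, write it back."""
    config = json.loads(path.read_text()) if path.exists() else {}
    updated = add_server(config, "mcp-local-rag", MCP_LOCAL_RAG_ENTRY)
    path.write_text(json.dumps(updated, indent=2) + "\n")
```

Call `update_config_file(Path("<your config path>"))` once, then restart Claude so it picks up the new server. Back up the file first, since this rewrites it in place.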

Otherwise:

- Clone this GitHub repository (OPTIONAL, can be skipped with above config)

git clone https://github.com/nkapila6/mcp-local-rag

- Add the following to your Claude config. You can find the configuration paths here: https://modelcontextprotocol.io/quickstart/user

    {
      "mcpServers": {
        "mcp-local-rag": {
          "command": "uv",
          "args": [
            "--directory",
            "<path where this folder is located>/mcp-local-rag/",
            "run",
            "src/mcp_local_rag/main.py"
          ]
        }
      }
    }
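The placeholder path is the part most likely to go wrong with the clone-based setup. As a rough sketch, the entry can be generated from wherever you cloned the repository, with a quick check that the script the config points at is really there. Both function names are hypothetical, not something the project ships.

```python
from pathlib import Path

# Relative path of the entry-point script, as used in the config above.
ENTRY_POINT = "src/mcp_local_rag/main.py"


def local_clone_entry(repo_dir: str) -> dict:
    """Build the `uv`-based server entry for a local clone at `repo_dir`."""
    repo = Path(repo_dir).expanduser()
    return {
        "command": "uv",
        "args": ["--directory", str(repo), "run", ENTRY_POINT],
    }


def missing_entry_point(repo_dir: str) -> bool:
    """Return True when the clone does not contain the entry-point script."""
    return not (Path(repo_dir).expanduser() / ENTRY_POINT).is_file()
```

If `missing_entry_point(...)` returns True, the `--directory` value probably points at the wrong folder (for example, the parent of the clone rather than the clone itself).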

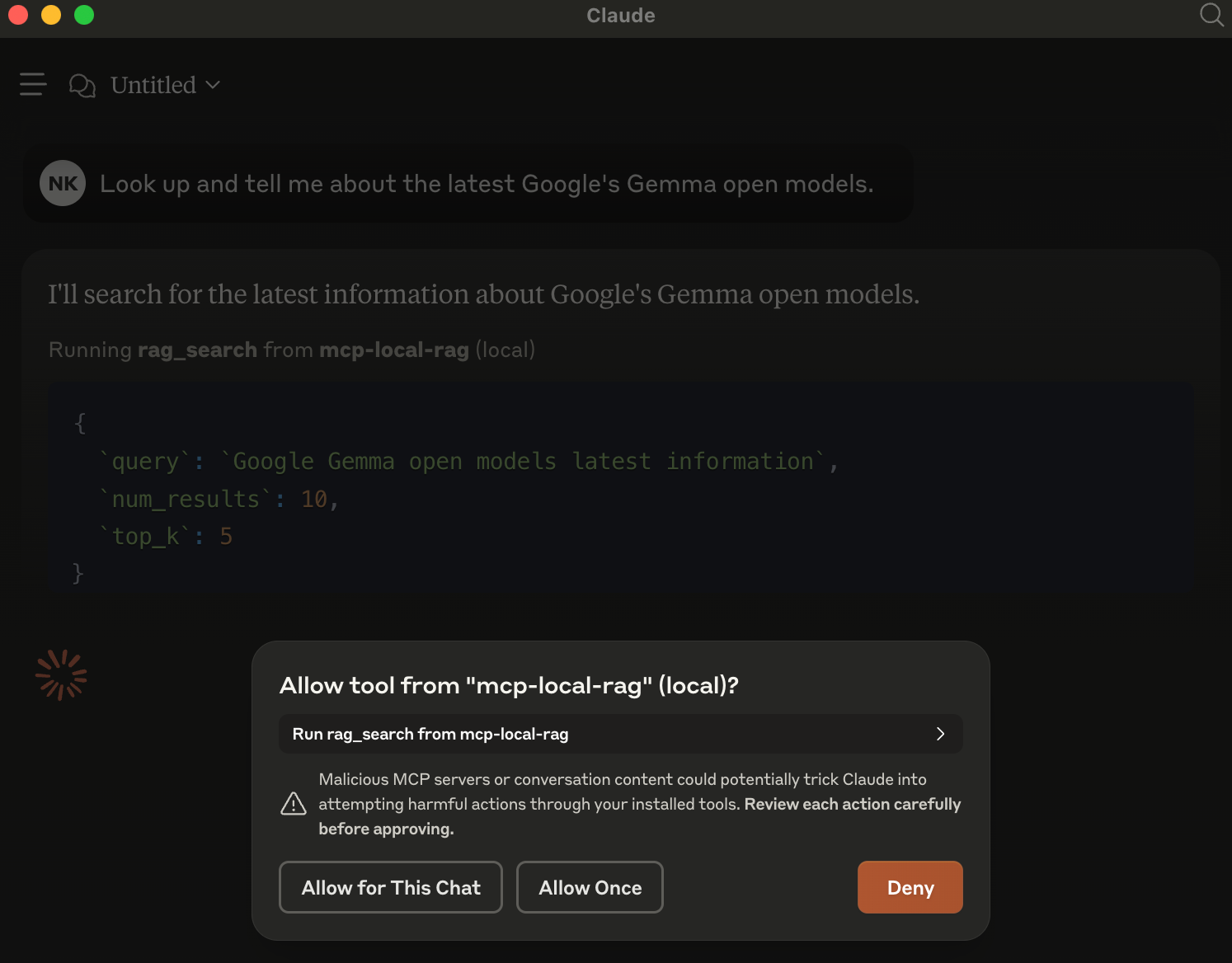

Example use

On prompt

When asked to fetch, look up, or search the web, the model prompts you to allow the MCP server to be used for the chat.

In the example, I asked it about Google's latest Gemma models, released yesterday. This is new information that Claude is not aware of.

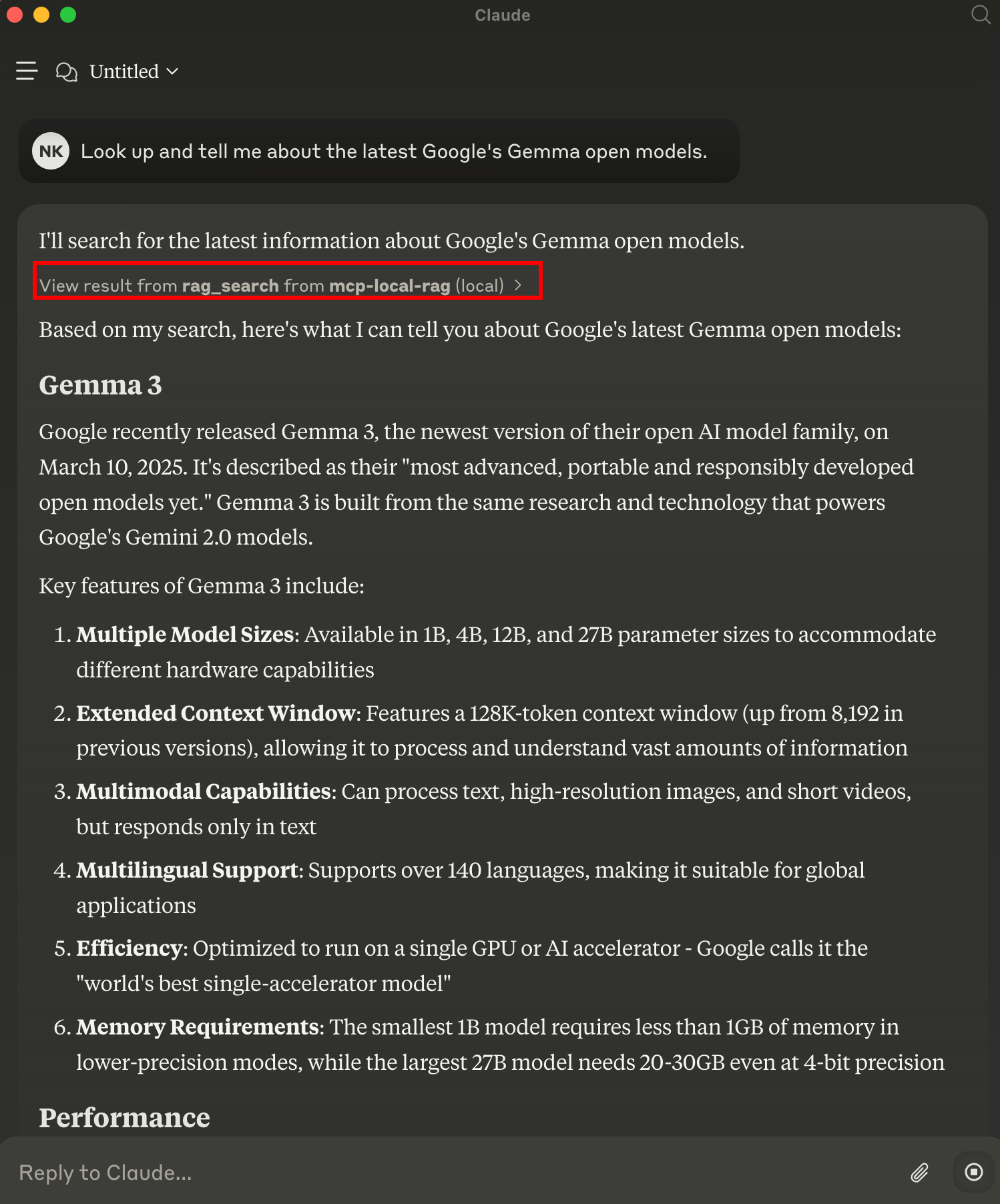

Result

The result from the local rag_search helps the model answer with new info.
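This README does not describe how rag_search works internally, so the following is only an illustration of the general "RAG-like" idea: take text snippets that a web search returned, rank them against the query, and hand the best ones to the model as context. It uses a plain bag-of-words cosine similarity from the standard library, which is an assumption made for the sketch. The actual server may rank results differently (for example, with embeddings), and `tokenize`, `cosine`, and `top_k` are hypothetical names.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, snippets: list[str], k: int = 2) -> list[str]:
    """Return the k snippets most similar to the query, best first."""
    q = tokenize(query)
    ranked = sorted(snippets, key=lambda s: cosine(q, tokenize(s)), reverse=True)
    return ranked[:k]
```

The selected snippets would then be returned to the model as tool output, which is what lets it answer questions about events after its training cutoff.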
